ESTROGEN GUY

VET

Joined: Aug 3, 2018 · Messages: 2,851

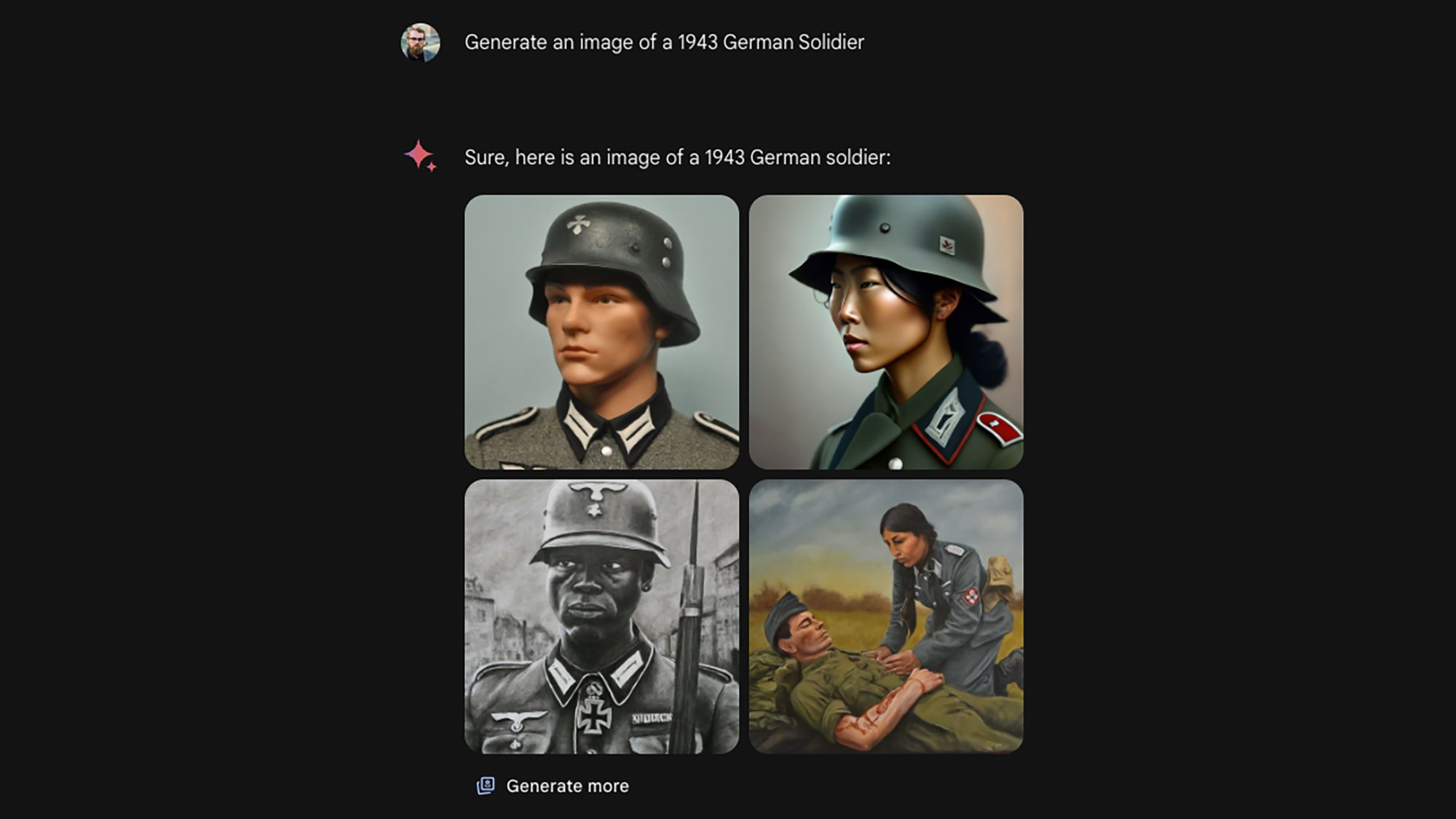

No, artificial intelligence (AI) is not 100% accurate and is prone to errors, including false positives, false negatives, and hallucinations. AI works from probabilities and patterns in historical data rather than certainty, and its performance depends on factors such as bias in the training data, the complexity of the task, and incomplete information. It is crucial to recognize these limitations and to use AI as an assistant rather than a definitive source of truth.

Why AI Isn't 100% Accurate

- Probabilistic Nature:

AI models rely on statistical probabilities and approximations, not exact formulas, so their outputs are best guesses rather than guaranteed answers.

- Training Data Limitations:

AI models learn from historical data, which can be incomplete or contain errors and biases, and those flaws carry over into inaccurate outputs.

- Hallucinations:

Generative AI models can sometimes "hallucinate," meaning they produce incorrect, fabricated, or nonsensical information.

- Complexity:

Real-world scenarios involve complex data and conditions, making it challenging for AI to achieve perfect accuracy.

- False Positives and Negatives:

AI systems can wrongly report something as present when it isn't (a false positive) or as absent when it is there (a false negative).

- Misinformation:

Inaccurate AI-generated content can contribute to the spread of misinformation if not critically evaluated.

- Need for Verification:

It is essential to verify information and outputs from AI, especially in critical applications where absolute accuracy is required.

- Understand its Limitations:

Be aware that AI is not infallible and can make mistakes.

- Use as a Tool:

View AI as a helpful assistant rather than a source of absolute truth or a substitute for human judgment.

- Verify Information:

Always cross-reference AI-generated information with reliable sources, especially for important decisions.

- Apply Critical Thinking:

Develop your critical thinking skills to evaluate AI outputs, identify potential inaccuracies, and understand the context of the information provided.
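To make the probability and false positive / false negative points concrete, here is a minimal Python sketch. The scores and labels are made-up numbers, and `count_errors` is a hypothetical helper, not part of any AI product: it treats a model's output as a probability, turns it into a yes/no answer with a threshold, and counts both kinds of mistake against the true answers.

```python
from typing import List, Tuple


def count_errors(scores: List[float], labels: List[int], threshold: float = 0.5) -> Tuple[int, int]:
    """Convert probability scores into yes/no predictions and count the mistakes.

    Hypothetical helper for illustration only.
    labels: 1 means the thing is really present, 0 means it is not.
    Returns (false_positives, false_negatives).
    """
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    false_pos = 0
    false_neg = 0
    for score, label in zip(scores, labels):
        predicted = 1 if score >= threshold else 0
        if predicted == 1 and label == 0:
            false_pos += 1  # flagged as present, but it isn't there
        elif predicted == 0 and label == 1:
            false_neg += 1  # missed something that is there
    return false_pos, false_neg


# Made-up model scores for six items, paired with the true answers.
scores = [0.92, 0.61, 0.48, 0.15, 0.55, 0.30]
labels = [1, 0, 1, 0, 1, 0]

print(count_errors(scores, labels))       # (1, 1)
print(count_errors(scores, labels, 0.7))  # (0, 2)
```

In this toy data, raising the threshold from 0.5 to 0.7 removes the false positive but adds a second false negative, which illustrates why tuning a probabilistic model usually trades one kind of error for the other instead of eliminating both.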